Load balancing Microsoft Always On VPN (AOVPN)

Benefits of load balancing Microsoft Always On VPN

There are a number of important benefits to load balancing Microsoft Always On VPN, including:

- High Availability (HA): Load balancing eliminates the single point of failure that would exist with a single VPN server. Because connections are distributed across multiple Remote Access (VPN) servers and Network Policy Servers (NPS), the remaining active servers can automatically take over the workload if one server fails. This ensures a reliable, “always-on” connection for end-users, even during planned maintenance or unexpected server issues, which is crucial for remote work.

- Scalability: Load balancing provides the ability to handle a growing number of remote users without service degradation. As your organization grows or if there are sudden spikes in remote access demand (e.g., during a business continuity event), you can easily add more VPN servers to the pool behind the load balancer. The load balancer intelligently distributes new connections across the expanded server infrastructure, allowing the VPN solution to scale to support thousands of concurrent users.

- Optimized performance: Load balancing ensures that no single VPN or NPS server becomes overwhelmed, leading to a better user experience. The load balancer uses algorithms (like round-robin or least connections) to spread the VPN tunnel connections and authentication requests across all available servers. By preventing resource exhaustion on any one server, it maintains stable and optimal connection speeds and overall throughput for all connected users. This prevents performance bottlenecks that can lead to slow connection speeds or dropped connections.
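
As a rough illustration of the two algorithms named above, the following minimal Python sketch shows how round-robin and least-connections selection differ. The class names and the `vpn1`/`vpn2`/`vpn3` server labels are hypothetical and chosen only for this example; this is not the appliance's implementation.

```python
from itertools import cycle


class RoundRobin:
    """Hand out servers in a fixed, rotating order."""

    def __init__(self, servers):
        self._rotation = cycle(list(servers))

    def pick(self):
        return next(self._rotation)


class LeastConnections:
    """Pick the server that currently holds the fewest active connections."""

    def __init__(self, servers):
        # Track the number of open connections per server.
        self.active = {server: 0 for server in servers}

    def pick(self):
        # On a tie, the server listed first wins.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        self.active[server] -= 1
```

Round-robin ignores how long each connection lasts, whereas least connections takes the current load into account, which tends to matter more for long-lived sessions such as VPN tunnels.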

About Microsoft Always On VPN

Microsoft Always On VPN provides a single, cohesive solution for remote access and supports domain-joined, non-domain-joined (workgroup), or Azure AD–joined devices, including personally owned devices.

With Always On VPN, the connection type does not have to be exclusively user or device but can be a combination of both. For example, you could enable device authentication for remote device management and then enable user authentication for connectivity to internal company sites and services.

Microsoft’s Enterprise solutions are at the heart of businesses everywhere. Loadbalancer.org is officially certified for all of Microsoft’s key applications which you can find here. More details on the Always On components, how it works, and prerequisites for load balancing can be found in our deployment guide, available to view below.

Why Loadbalancer.org for Microsoft Always On VPN?

Our load balancer consistently delivers peak performance in Always On VPN (AOVPN) environments and comes highly recommended by enterprise AOVPN expert, Richard Hicks:

The Loadbalancer.org appliance is an efficient, cost-effective, and easy-to-configure load-balancing solution that works well with Always On VPN implementations. It’s available as a physical or virtual appliance. There’s also a cloud-based version. It also includes advanced features such as TLS offload, web application firewall (WAF), global server load balancing (GSLB), and more. If you are looking for a layer 4-7 load balancer for Always On VPN and other workloads, be sure to check them out.

Endorsed by Richard Hicks, the enterprise AOVPN expert

“It’s even easier to set up than the Kemp, so I’ll recommend it to my customers going forward!”

We also offer a free and frequently cited comprehensive guide to setting up and using AOVPN to accompany the Microsoft AOVPN deployment guide attached below.

How to load balance Microsoft Always On VPN

For IKEv2, the load balancing method used must be transparent. This means that the client’s source IP address is retained through to the Real Servers. Transparency is required for IKEv2 because Windows limits the number of IPsec Security Associations (SAs) coming from a single IP address. If a non-transparent method were used, the source IP address for all traffic reaching the IKEv2 servers would be either the VIP address or the load balancer’s own address, depending on the specific configuration.
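
The effect of transparency on what the VPN server sees can be illustrated with a small Python sketch. The function name, the client addresses, and the load balancer address `192.168.10.5` are assumptions made for the example, and no specific Windows SA limit is modelled.

```python
from collections import Counter


def sources_seen_by_server(client_ips, transparent, lb_ip="192.168.10.5"):
    """Count connections per source IP as the VPN server would attribute them.

    In a transparent mode the server sees each client's own address.
    In a non-transparent mode every connection appears to come from the
    load balancer (lb_ip is a made-up address for this sketch).
    """
    seen = Counter()
    for ip in client_ips:
        seen[ip if transparent else lb_ip] += 1
    return seen
```

With three clients, the transparent case yields three distinct sources with one connection each, while the non-transparent case yields a single source carrying all three, which is the situation a per-IP limit would penalise.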

Both Layer 4 DR mode and Layer 4 NAT mode are transparent and either can be used for IKEv2. When using DR mode, the “ARP problem” must be solved on all VPN Servers. For NAT mode, the default gateway for each VPN Server must be the load balancer.

For SSTP and NPS, transparency is not required, although the load balancing method selected must support UDP (used by NPS). Therefore, whilst DR mode or NAT mode can be used, Layer 4 SNAT mode is a simpler option since it requires no mode-specific configuration changes to the Real Servers.

In the attached deployment guide below, Layer 4 DR mode is used for IKEv2 and Layer 4 SNAT mode is used for SSTP and NPS.

Ports and services

| Port | Protocol | Use |
|---|---|---|
| 443 | TCP/HTTPS | All Always On VPN client to server SSTP communication |
| 500, 4500 | UDP/IKEv2 | IKEv2 communication |
| 1812, 1813 | UDP | Network policy server communication |
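
For planning purposes, the ports above and the modes used in the deployment guide can be captured as plain data. The sketch below is only a hypothetical summary structure; the names `SERVICES` and `listeners` are not part of any product API.

```python
# Hypothetical summary of the ports table, paired with the load balancing
# mode that the deployment guide uses for each service.
SERVICES = {
    "SSTP": {"protocol": "TCP", "ports": (443,), "mode": "Layer 4 SNAT"},
    "IKEv2": {"protocol": "UDP", "ports": (500, 4500), "mode": "Layer 4 DR"},
    "NPS": {"protocol": "UDP", "ports": (1812, 1813), "mode": "Layer 4 SNAT"},
}


def listeners(service):
    """Return the (protocol, port) pairs a Virtual Service must accept."""
    entry = SERVICES[service]
    return [(entry["protocol"], port) for port in entry["ports"]]
```

For example, `listeners("IKEv2")` returns both UDP ports, which need to be handled by the same Virtual Service.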

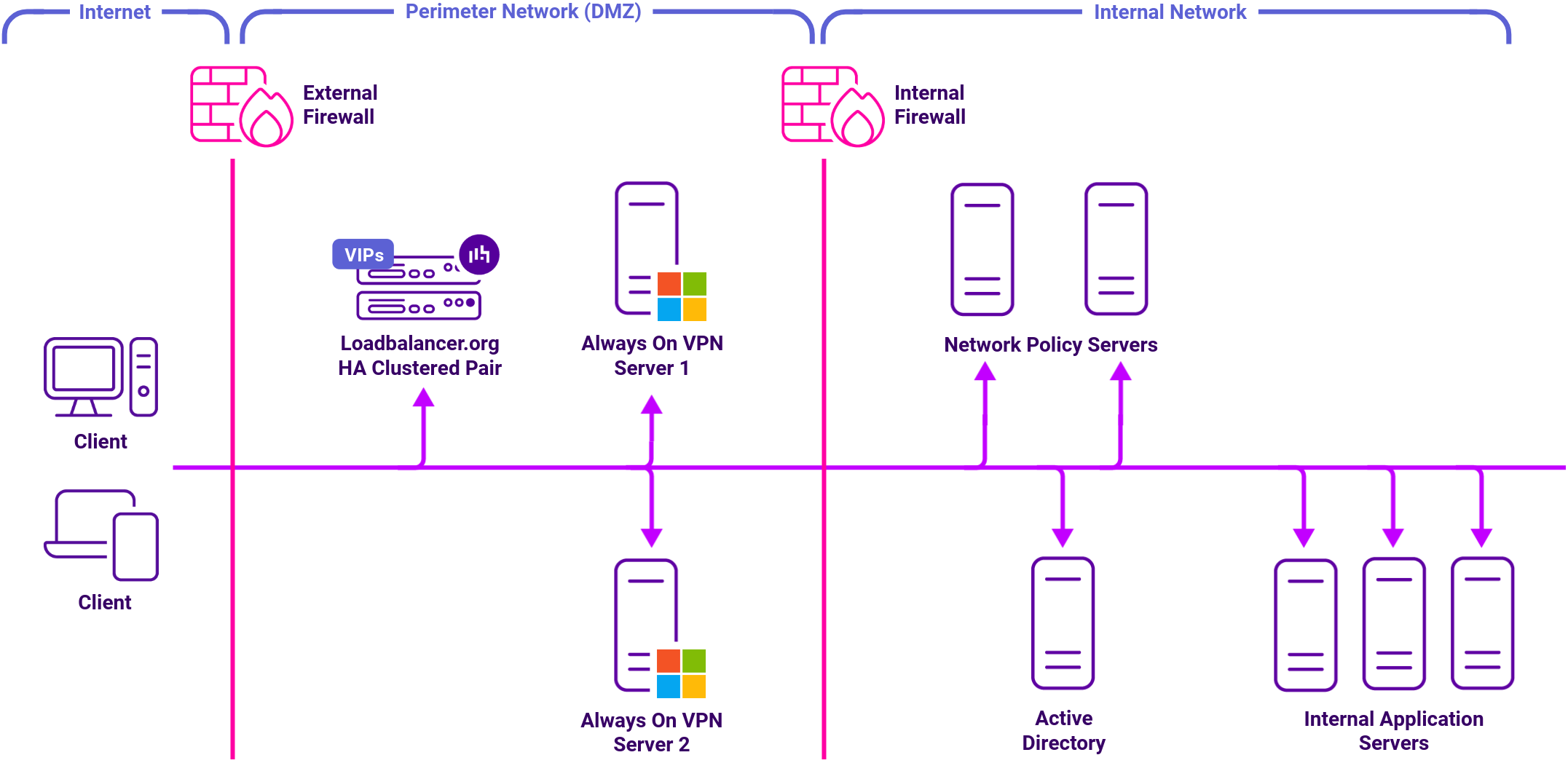

Load balancing deployment concept

Note

Load balancers can be deployed as single units or as a clustered pair. We recommend deploying a clustered pair for resilience and high availability.

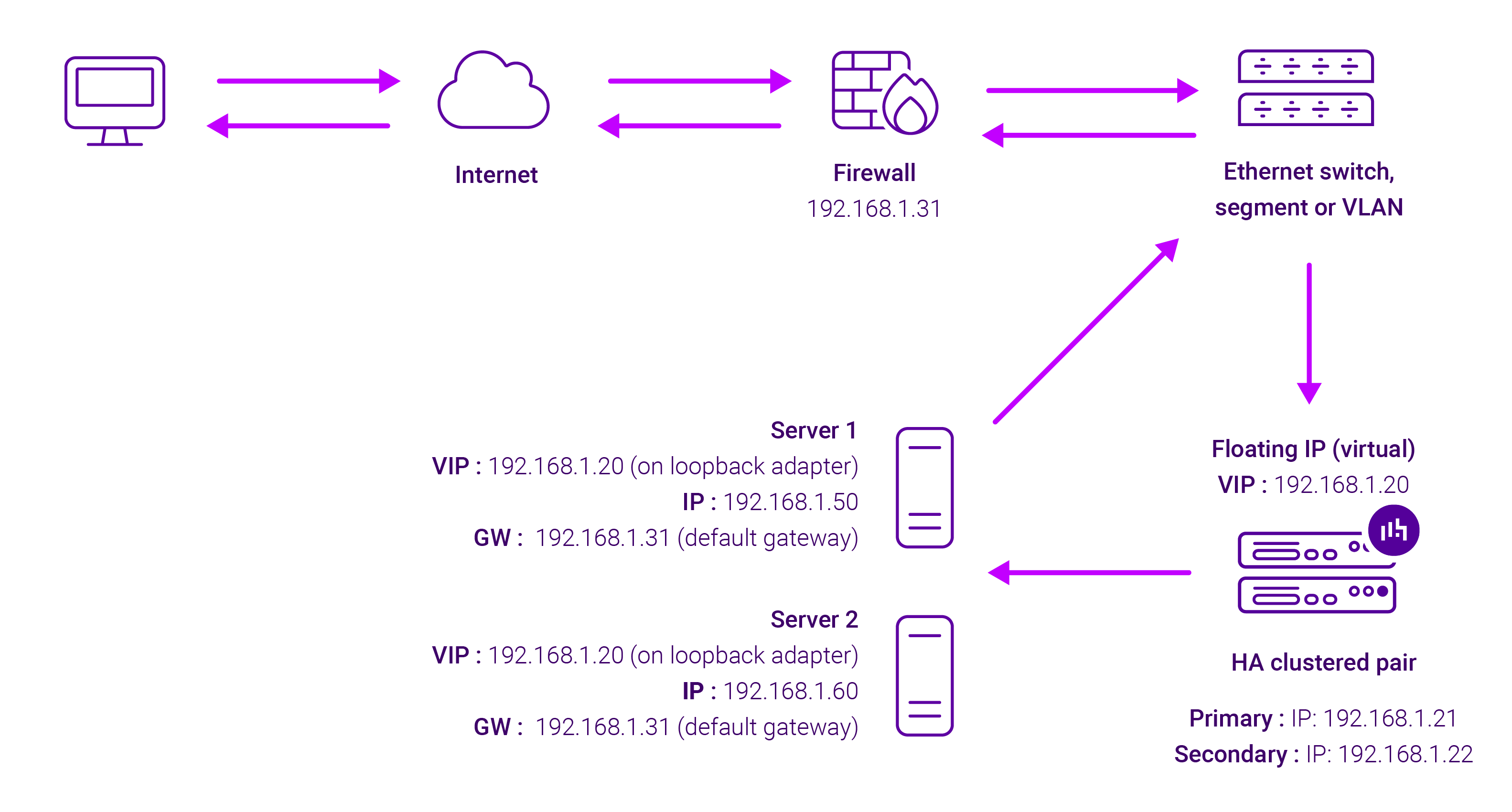

About Layer 4 DR mode load balancing

One-arm direct routing (DR) mode is a very high performance solution that requires little change to your existing infrastructure.

DR mode works by changing the destination MAC address of the incoming packet to match the selected Real Server on the fly, which is very fast.

The packet reaches the Real Server still addressed to the Virtual Service’s IP address (VIP), so the Real Server is expected to own the VIP. This means that you need to ensure that the Real Server (and the load balanced application) responds to both the Real Server’s own IP address and the VIP.

The Real Servers should not respond to ARP requests for the VIP. Only the load balancer should do this. Configuring the Real Servers in this way is referred to as Solving the ARP problem.

On average, DR mode is 8 times quicker than NAT for HTTP, 50 times quicker for Terminal Services and much, much faster for streaming media or FTP.

The load balancer must have an interface in the same subnet as the Real Servers to ensure the Layer 2 connectivity required for DR mode to work.

The VIP can be brought up on the same subnet as the Real Servers, or on a different subnet provided that the load balancer has an interface in that subnet.

Port translation is not possible with DR mode, e.g. VIP:80 → RIP:8080 is not supported. DR mode is transparent, i.e. the Real Server will see the source IP address of the client.
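
The behaviour described above can be modelled in a few lines of Python. This is a simplified sketch rather than real packet handling: the `Frame` type, the `dr_forward` function, and all addresses are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    dst_mac: str   # Layer 2 destination
    src_ip: str    # client address
    dst_ip: str    # the VIP
    dst_port: int


def dr_forward(frame, real_server_mac):
    """Model Layer 4 DR mode: only the destination MAC address changes.

    The source IP (transparency), the destination IP (the VIP), and the
    destination port (no port translation) are all left untouched.
    """
    return replace(frame, dst_mac=real_server_mac)
```

Because the destination IP is still the VIP when the frame arrives, the Real Server must accept traffic for the VIP without answering ARP for it, which is why the ARP problem has to be solved.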

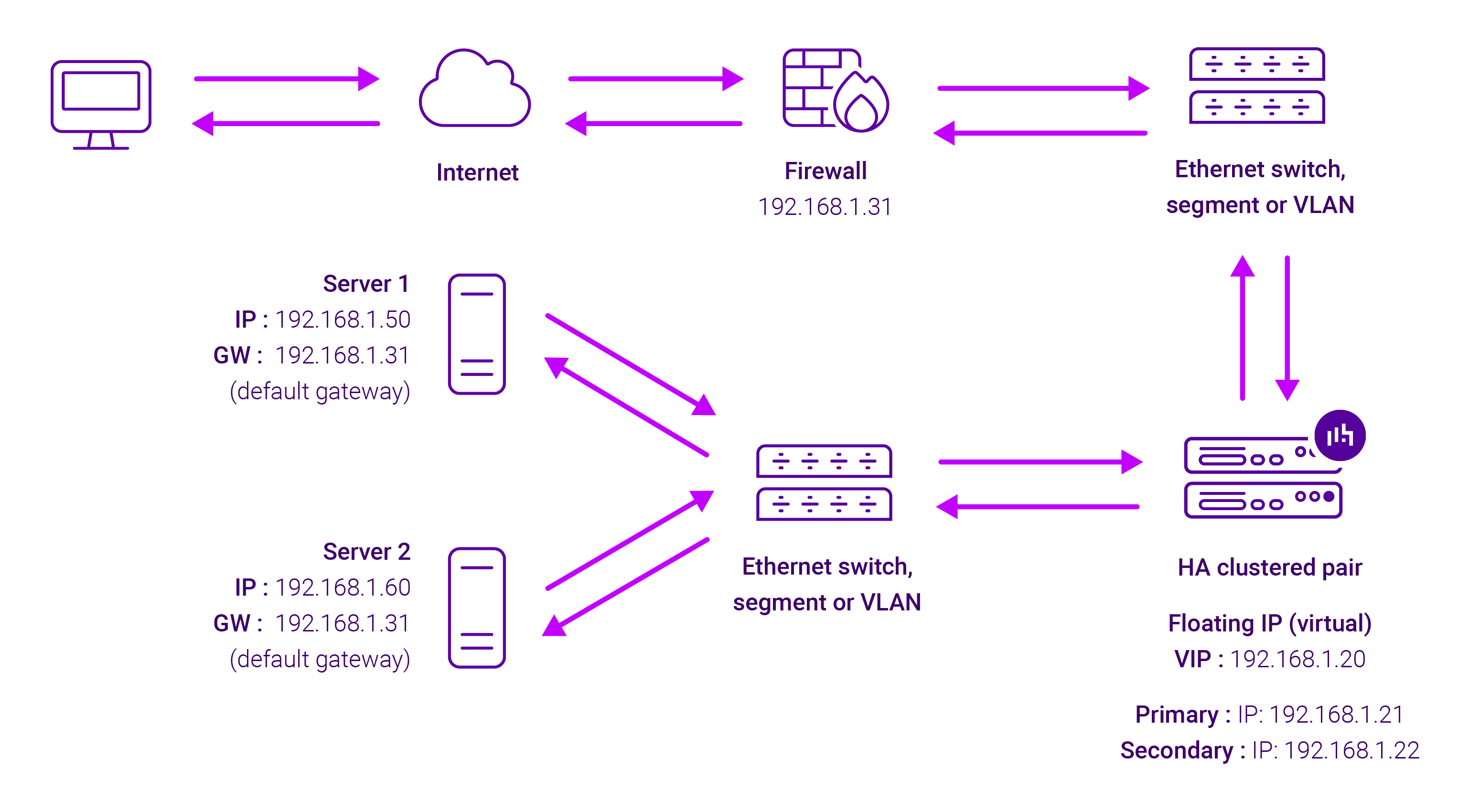

About Layer 4 SNAT mode load balancing

Layer 4 SNAT mode is a high-performance solution, although not as fast as Layer 4 NAT mode or Layer 4 DR mode.

The load balancer translates all requests from the external Virtual Service to the internal Real Servers in the same way as NAT mode.

Layer 4 SNAT mode is not transparent: an iptables SNAT rule translates the source IP address to be the load balancer’s address rather than the original client IP address. Layer 4 SNAT mode can be deployed using either a one-arm or two-arm configuration. For two-arm deployments, eth0 is normally used for the internal network and eth1 is used for the external network, although this is not mandatory.

If the Real Servers require Internet access, Autonat should be enabled using the WebUI option Cluster Configuration > Layer 4 – Advanced Configuration, and the external interface should be selected.

Layer 4 SNAT requires no mode-specific configuration changes to the load balanced Real Servers. Port translation is possible with Layer 4 SNAT mode, e.g. VIP:80 → RIP:8080 is supported. You should not use the same RIP:PORT combination for Layer 4 SNAT mode VIPs and Layer 7 Reverse Proxy mode VIPs because the required firewall rules conflict.
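
To contrast this with DR mode, the following Python sketch models what Layer 4 SNAT mode does to a packet. It is an illustration only: the `Packet` type, the `snat_forward` function, and the addresses are hypothetical, and the real translation is performed by the load balancer's firewall rules, not by code like this.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


def snat_forward(packet, lb_ip, rip, rip_port: Optional[int] = None):
    """Model Layer 4 SNAT mode.

    The destination becomes the Real Server (RIP), optionally on a
    different port, and the source becomes the load balancer, so the
    Real Server no longer sees the client's address.
    """
    port = packet.dst_port if rip_port is None else rip_port
    return replace(packet, src_ip=lb_ip, dst_ip=rip, dst_port=port)
```

Since replies go back to the load balancer's own address, the Real Servers need no gateway or ARP changes, which matches the statement that no mode-specific configuration is required.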